
Microsoft.Extensions.AI is a .NET library for integrating AI capabilities into .NET applications. This guide walks through its main features and shows how to use each of them with models served locally by Ollama, so that you can get up and running quickly.

Prerequisites

Before you begin, make sure you have the following installed:

- .NET 8 SDK

- Visual Studio or VS Code

- Ollama

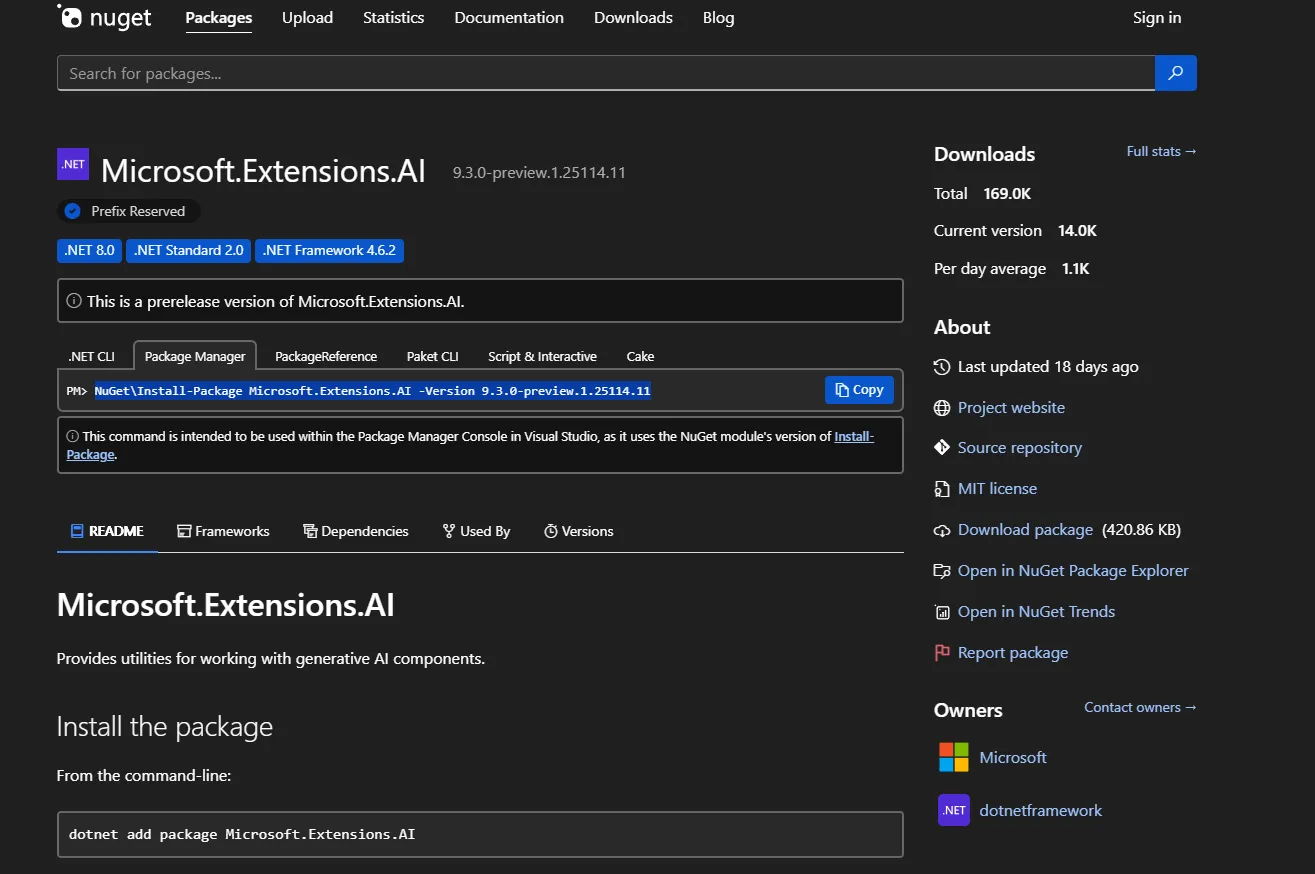

Installing the NuGet packages

```powershell
NuGet\Install-Package Microsoft.Extensions.AI -Version 9.3.0-preview.1.25114.11
NuGet\Install-Package Microsoft.Extensions.AI.Ollama -Version 9.3.0-preview.1.25114.11
```

Quick start

Downloading the models

First, use Ollama to download the required models:

```bash
ollama pull deepseek-r1:1.5b   # chat model
ollama pull all-minilm:latest  # embedding model
```

Basic examples

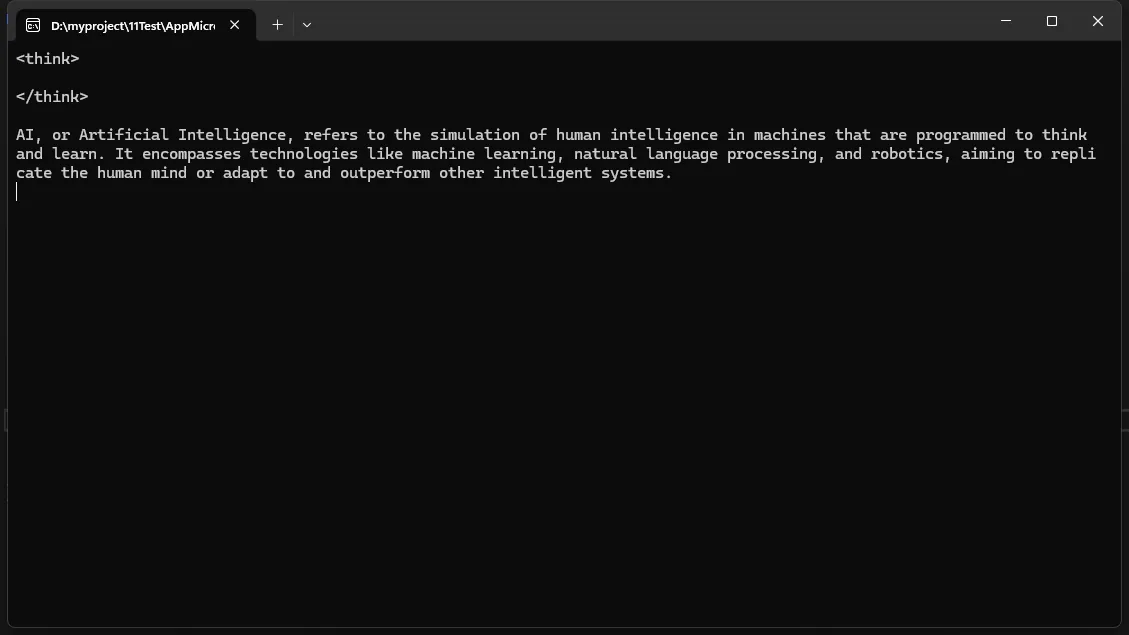

Basic chat

```csharp
using Microsoft.Extensions.AI;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            // Address of the local Ollama server and the chat model pulled earlier.
            var endpoint = "http://localhost:11434/";
            var modelId = "deepseek-r1:1.5b";

            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId);

            // Send a single prompt and wait for the complete response.
            var result = await client.GetResponseAsync("What is AI?");
            Console.WriteLine(result.Message);

            Console.ReadLine();
        }
    }
}
```

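Properties of the ChatResponse class (as of the preview version installed above):

- Choices (list of message choices)
  - Type: IList<ChatMessage>
  - Contains the messages of the chat response
  - If the response has several choices, they are all stored in this list
  - The first choice can be accessed directly through the Message property

- Message (primary message)
  - Returns the first message in the Choices list
  - Throws InvalidOperationException if no choice is available
  - Marked with [JsonIgnore], so it is skipped during JSON serialization

- ResponseId (response identifier)
  - Type: string
  - Unique identifier of the chat response

- ChatThreadId (chat thread identifier)
  - Type: string
  - Identifies the state of the chat thread
  - Some chat implementations use it to preserve conversation context
  - Can be passed in later chat requests to continue the conversation

- ModelId (model identifier)
  - Type: string
  - Identifies the AI model that generated the response

- CreatedAt (creation time)
  - Type: DateTimeOffset
  - Timestamp of when the chat response was created

- FinishReason (finish reason)
  - Type: ChatFinishReason
  - Indicates why response generation stopped (for example, completed or length limit reached)

- Usage (usage information)
  - Type: UsageDetails
  - Contains resource usage details for the request, such as token counts

- RawRepresentation (raw representation)
  - Type: object
  - Holds the original underlying response object
  - Useful for debugging or for reaching implementation-specific details
  - Marked with [JsonIgnore]

- AdditionalProperties (additional properties)
  - Type: AdditionalPropertiesDictionary
  - Stores extra properties that are not explicitly defined on the class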
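To make the property list above concrete, here is a minimal sketch that prints some of the response metadata. It assumes the same local Ollama endpoint and model as the basic chat example, and it is written with top-level statements for brevity. Which fields are actually populated depends on the provider, so some values may print as empty.

```csharp
using System;
using Microsoft.Extensions.AI;

// Assumption: Ollama is running locally and deepseek-r1:1.5b has been pulled.
IChatClient client = new OllamaChatClient("http://localhost:11434/", modelId: "deepseek-r1:1.5b");

ChatResponse response = await client.GetResponseAsync("What is AI?");

// Metadata properties are nullable; a missing value prints as an empty string.
Console.WriteLine($"Model:         {response.ModelId}");
Console.WriteLine($"Created at:    {response.CreatedAt}");
Console.WriteLine($"Finish reason: {response.FinishReason}");
Console.WriteLine($"Total tokens:  {response.Usage?.TotalTokenCount}");
Console.WriteLine($"Choices:       {response.Choices.Count}");

// Message is a shortcut for the first entry in Choices.
Console.WriteLine(response.Message.Text);
```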

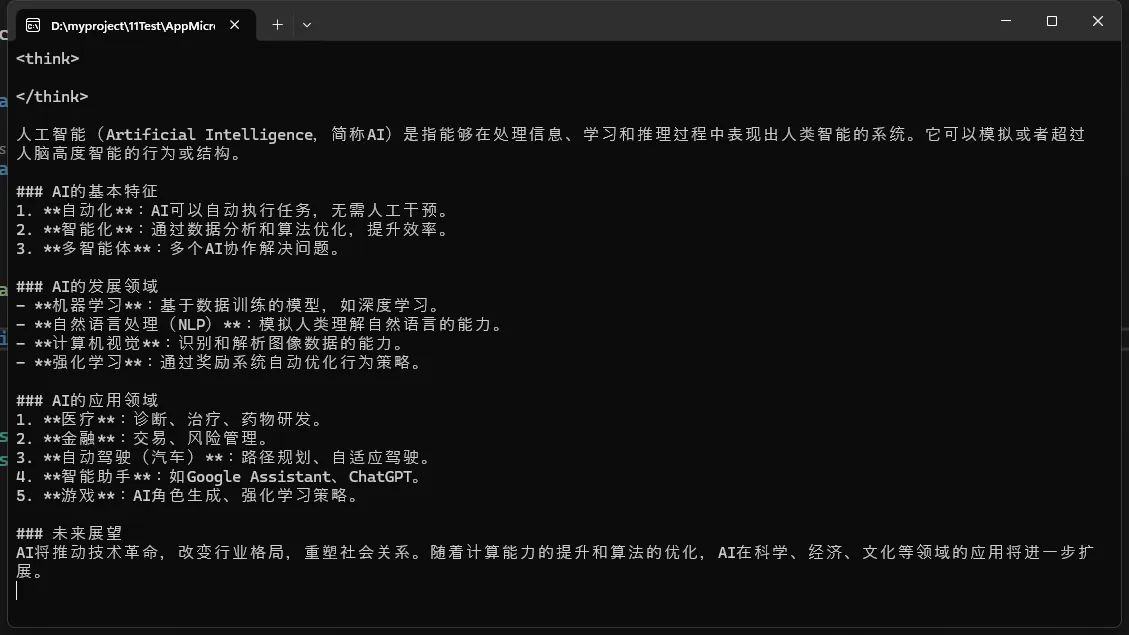

Managing conversation history

```csharp
using Microsoft.Extensions.AI;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            var endpoint = "http://localhost:11434/";
            var modelId = "deepseek-r1:1.5b";

            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId);

            // The conversation is an ordered list of messages: a system prompt
            // that sets the assistant's behavior, followed by the user's question.
            List<ChatMessage> conversation =
            [
                new(ChatRole.System, "You are a helpful AI assistant"),
                new(ChatRole.User, "What is AI?")
            ];

            Console.WriteLine(await client.GetResponseAsync(conversation));

            Console.ReadLine();
        }
    }
}
```
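The example above sends the history once. For a multi-turn conversation, one common approach is to append each reply to the list before asking the next question, so that the model sees the earlier turns. The sketch below assumes the same endpoint and model, and relies on the Message property of the preview version used in this article; the follow-up question is purely illustrative.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.AI;

// Assumption: Ollama is running locally and deepseek-r1:1.5b has been pulled.
IChatClient client = new OllamaChatClient("http://localhost:11434/", modelId: "deepseek-r1:1.5b");

List<ChatMessage> conversation =
[
    new(ChatRole.System, "You are a helpful AI assistant")
];

// Hypothetical questions; the second one only makes sense with the first in context.
string[] questions = ["What is AI?", "Summarize that in one sentence."];

foreach (var question in questions)
{
    conversation.Add(new ChatMessage(ChatRole.User, question));

    ChatResponse response = await client.GetResponseAsync(conversation);
    Console.WriteLine(response.Message.Text);

    // Keep the assistant's reply so the next turn has the full history.
    conversation.Add(response.Message);
}
```

Because the whole list is sent on every request, long conversations grow the prompt; trimming or summarizing old turns is left out of this sketch.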

Streaming responses

```csharp
using Microsoft.Extensions.AI;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            var endpoint = "http://localhost:11434/";
            var modelId = "deepseek-r1:1.5b";

            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId);

            // Print each update as soon as it arrives instead of waiting
            // for the full response.
            await foreach (var update in client.GetStreamingResponseAsync("What is AI?"))
            {
                Console.Write(update);
            }

            Console.WriteLine();
            Console.ReadKey();
        }
    }
}
```

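Middleware support

- Caching middleware: caches responses

- OpenTelemetry: performance monitoring and tracing

- Tool-calling middleware: lets the model invoke functions that extend its capabilities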

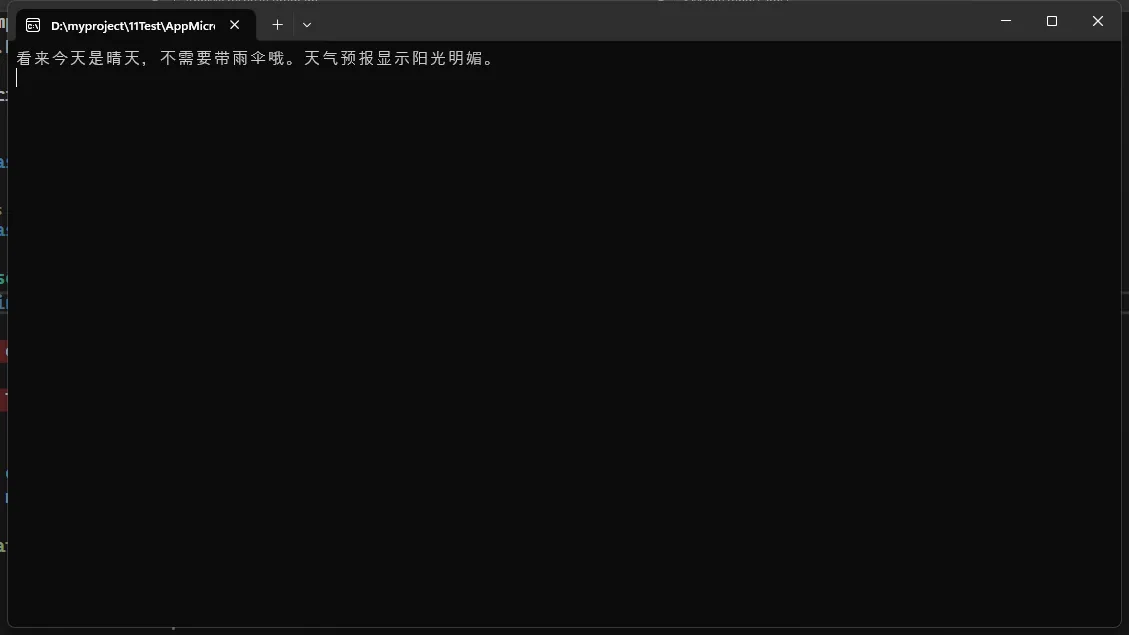

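Tool calling

```csharp
using System.ComponentModel;
using Microsoft.Extensions.AI;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            // A local function exposed to the model as a tool. The description
            // helps the model decide when to call it.
            [Description("Gets the weather")]
            string GetWeather() => Random.Shared.NextDouble() > 0.5 ? "It's sunny" : "It's raining";

            var chatOptions = new ChatOptions
            {
                Tools = [AIFunctionFactory.Create(GetWeather)]
            };

            var endpoint = "http://localhost:11434/";
            // This example uses a different model from the ones pulled earlier;
            // download it first with: ollama pull qwen2.5:3b
            var modelId = "qwen2.5:3b";

            // UseFunctionInvocation adds the middleware that runs the tool
            // when the model requests it.
            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId)
                .AsBuilder()
                .UseFunctionInvocation()
                .Build();

            Console.WriteLine(await client.GetResponseAsync("Do I need an umbrella?", chatOptions));

            Console.ReadKey();
        }
    }
}
```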

Using the prompt caching middleware

```csharp
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Options;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            var endpoint = "http://localhost:11434/";
            var modelId = "deepseek-r1:1.5b";

            // In-memory IDistributedCache implementation; it comes from the
            // Microsoft.Extensions.Caching.Memory NuGet package.
            var options = Options.Create(new MemoryDistributedCacheOptions());
            IDistributedCache cache = new MemoryDistributedCache(options);

            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId)
                .AsBuilder()
                .UseDistributedCache(cache)
                .Build();

            // The third prompt repeats the first, so it should be served from the cache.
            string[] prompts = ["What is AI?", "What is .NET?", "What is AI?"];

            foreach (var prompt in prompts)
            {
                await foreach (var message in client.GetStreamingResponseAsync(prompt))
                {
                    Console.Write(message);
                }

                Console.WriteLine();
            }

            Console.ReadKey();
        }
    }
}
```
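One way to observe the cache at work is to time two identical requests. The sketch below is an illustration under the same assumptions as the caching example (local Ollama, the same model, an in-memory cache); the second call would normally return much faster because it does not reach the model, but no particular timings are implied.

```csharp
using System;
using System.Diagnostics;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Options;

// Assumption: Ollama is running locally and deepseek-r1:1.5b has been pulled.
IDistributedCache cache = new MemoryDistributedCache(
    Options.Create(new MemoryDistributedCacheOptions()));

IChatClient client = new OllamaChatClient("http://localhost:11434/", modelId: "deepseek-r1:1.5b")
    .AsBuilder()
    .UseDistributedCache(cache)
    .Build();

// Send the same prompt twice and measure how long each call takes.
string[] prompts = ["What is AI?", "What is AI?"];

foreach (var prompt in prompts)
{
    var stopwatch = Stopwatch.StartNew();
    ChatResponse response = await client.GetResponseAsync(prompt);
    stopwatch.Stop();

    int length = response.Message.Text?.Length ?? 0;
    Console.WriteLine($"{stopwatch.ElapsedMilliseconds} ms, {length} characters");
}
```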

The following example combines function invocation, OpenTelemetry tracing, and caching in a single pipeline:

```csharp
using System.ComponentModel;
using System.Diagnostics;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Options;
using OpenTelemetry.Trace;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            // Collect trace activities in memory. AddInMemoryExporter comes from
            // the OpenTelemetry.Exporter.InMemory NuGet package.
            var sourceName = Guid.NewGuid().ToString();
            var activities = new List<Activity>();

            using var tracerProvider = OpenTelemetry.Sdk.CreateTracerProviderBuilder()
                .AddInMemoryExporter(activities)
                .AddSource(sourceName)
                .Build();

            [Description("Gets the weather")]
            string GetWeather() => Random.Shared.NextDouble() > 0.5 ? "It's sunny" : "It's raining";

            var chatOptions = new ChatOptions
            {
                Tools = [AIFunctionFactory.Create(GetWeather)]
            };

            var endpoint = "http://localhost:11434/";
            var modelId = "qwen2.5:3b";

            var options = Options.Create(new MemoryDistributedCacheOptions());
            IDistributedCache cache = new MemoryDistributedCache(options);

            // Middleware is applied in the order it is added to the builder.
            IChatClient client = new OllamaChatClient(endpoint, modelId: modelId)
                .AsBuilder()
                .UseFunctionInvocation()
                .UseOpenTelemetry(sourceName: sourceName, configure: o => o.EnableSensitiveData = true)
                .UseDistributedCache(cache)
                .Build();

            List<ChatMessage> conversation =
            [
                new(ChatRole.System, "You are a helpful AI assistant"),
                new(ChatRole.User, "Do I need an umbrella?")
            ];

            // Pass the conversation (system prompt included) rather than a bare string.
            Console.WriteLine(await client.GetResponseAsync(conversation, chatOptions));

            Console.ReadKey();
        }
    }
}
```

Text embeddings

```csharp
using Microsoft.Extensions.AI;

namespace AppMicrosoftAI
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            var endpoint = "http://localhost:11434/";
            var modelId = "all-minilm:latest";

            IEmbeddingGenerator<string, Embedding<float>> generator =
                new OllamaEmbeddingGenerator(endpoint, modelId: modelId);

            // Convert the text into a vector of floats and print its components.
            var embedding = await generator.GenerateEmbeddingVectorAsync("What is AI?");
            Console.WriteLine(string.Join(", ", embedding.ToArray()));

            Console.ReadKey();
        }
    }
}
```
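Embedding vectors are usually compared rather than printed. A common measure is cosine similarity, sketched below as a plain helper. The `CosineSimilarity` function and the toy vectors are illustrative and not part of Microsoft.Extensions.AI; the vectors stand in for real embeddings so the snippet runs without Ollama.

```csharp
using System;

// Cosine similarity: dot(a, b) / (|a| * |b|).
// Close to 1 means the vectors point the same way; 0 means they are orthogonal.
// Note: a zero-length vector makes the denominator 0 and yields NaN.
static double CosineSimilarity(ReadOnlySpan<float> a, ReadOnlySpan<float> b)
{
    if (a.Length != b.Length)
        throw new ArgumentException("Vectors must have the same length.");

    double dot = 0, normA = 0, normB = 0;
    for (int i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }

    return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
}

// Hypothetical toy vectors standing in for real embeddings.
float[] v1 = [1f, 2f, 3f];
float[] v2 = [2f, 4f, 6f];   // same direction as v1
float[] v3 = [-3f, 0f, 1f];  // orthogonal to v1

Console.WriteLine(CosineSimilarity(v1, v2)); // approximately 1
Console.WriteLine(CosineSimilarity(v1, v3)); // 0
```

With real embeddings, the two `ReadOnlyMemory<float>` values returned by `GenerateEmbeddingVectorAsync` can be passed through their `Span` property.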

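Best practices

- Manage AI clients through dependency injection

- Implement appropriate error handling

- Manage conversation history

- Use middleware to extend functionality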
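As a rough illustration of the first two practices, the sketch below registers the client in a service container and wraps the call in basic error handling. It assumes the Microsoft.Extensions.DependencyInjection package is referenced in addition to the packages installed earlier. The exception types caught here are assumptions about how a connection failure or a timeout would surface, and the 60-second limit is arbitrary.

```csharp
using System;
using System.Net.Http;
using System.Threading;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.DependencyInjection;

var services = new ServiceCollection();

// Register one shared IChatClient. AddChatClient returns a builder,
// so middleware could be chained onto this call as well.
services.AddChatClient(
    new OllamaChatClient("http://localhost:11434/", modelId: "deepseek-r1:1.5b"));

using var provider = services.BuildServiceProvider();
var client = provider.GetRequiredService<IChatClient>();

// Cancel the request if it takes longer than the (arbitrary) limit.
using var timeout = new CancellationTokenSource(TimeSpan.FromSeconds(60));

try
{
    var response = await client.GetResponseAsync("What is AI?", cancellationToken: timeout.Token);
    Console.WriteLine(response.Message.Text);
}
catch (HttpRequestException ex)
{
    // Assumption: an unreachable Ollama server surfaces as an HTTP error.
    Console.Error.WriteLine($"Could not reach Ollama: {ex.Message}");
}
catch (OperationCanceledException)
{
    Console.Error.WriteLine("The request timed out.");
}
```

In a real application the client would typically be injected into the classes that need it rather than resolved directly from the provider.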

Conclusion

Together, Microsoft.Extensions.AI and Ollama give .NET developers a powerful, flexible way to integrate AI and build intelligent applications with little effort.

Author: 技术老小子


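Copyright notice: unless otherwise stated, all articles on this blog are licensed under the BY-NC-SA license. Please credit the source when reposting.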
