目录

As a C# developer, do you often face this frustration: your system needs to handle massive concurrent tasks, but traditional thread pool solutions either lack performance or consume too much memory? Or when using BlockingCollection, you find it outdated and lacking the elegance of modern asynchronous programming?

This article will completely solve this problem through a complete Channel task processor implementation, helping you master the best practices of modern C# high-concurrency programming. Whether it's API request processing, bulk data import, or message queue consumption, this solution can boost your system performance by 3-5 times!

🔍 Deep Problem Analysis: Why Traditional Solutions Aren't Enough?

Three Major Pain Points of Traditional Solutions

1. Thread Pool Abuse Leading to Resource Waste

C#// ❌ Traditional approach: Creating new threads for each task

Task.Run(() => ProcessTask()); // High thread overhead, frequent context switching

2. BlockingCollection Performance Bottleneck

C#// ❌ Old-style synchronous solution

BlockingCollection<TaskItem> queue = new(); // Blocking, no async support

3. Lack of Elegant Lifecycle Management

- No reasonable timeout mechanism

- Lack of metrics monitoring

- Easy data loss during shutdown

💡 Channel Solution: The Perfect Modern Solution

Channel is a high-performance, async-first producer-consumer pattern implementation introduced in .NET Core. Compared to traditional solutions, it has the following advantages:

- 🚀 High Performance: Zero-allocation async operations

- 🛡️ Memory Safe: Bounded queues prevent memory overflow

- 🎯 Async-First: Perfect support for async/await pattern

- 📊 Observability: Built-in backpressure and flow control

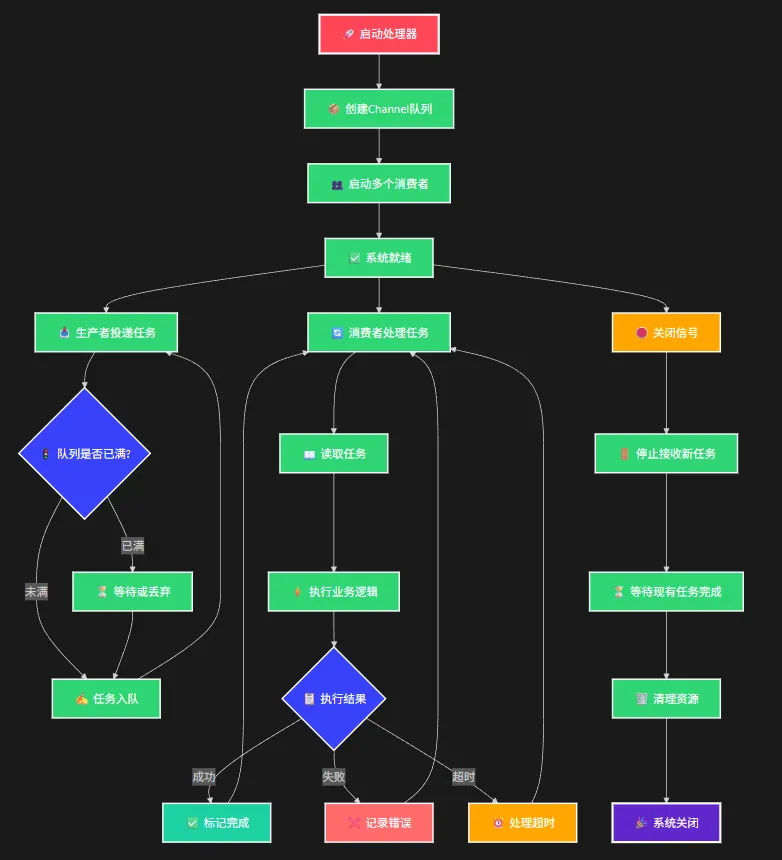

🔥 Core Implementation: Production-Grade Channel Task Processor

📋 Step 1: Configuration and Metrics System

C#// 📊 Task processor configuration - Production environment tunable

public class ChannelTaskProcessorOptions

{

public int Capacity { get; set; } = 1000; // Queue capacity

public int ConsumerCount { get; set; } = Environment.ProcessorCount; // Consumer count

public TimeSpan GracefulShutdownTimeout { get; set; } = TimeSpan.FromSeconds(30);

public TimeSpan TaskTimeout { get; set; } = TimeSpan.FromMinutes(5);

public bool EnableMetrics { get; set; } = true; // Performance monitoring switch

public BoundedChannelFullMode FullMode { get; set; } = BoundedChannelFullMode.Wait;

}

// 📈 Thread-safe performance metrics collection

public class ProcessingMetrics

{

private long _totalEnqueued;

private long _totalProcessed;

private long _totalFailed;

private readonly ConcurrentDictionary<string, long> _consumerMetrics = new();

public long PendingCount => _totalEnqueued - _totalProcessed - _totalFailed;

public void IncrementProcessed(string consumerId)

{

Interlocked.Increment(ref _totalProcessed);

_consumerMetrics.AddOrUpdate(consumerId, 1, (key, value) => value + 1);

}

// ... Other metrics methods

}

⚠️ Key Configuration Notes:

Capacity: Set based on memory size, recommend 1000-10000ConsumerCount: Usually set to CPU core count, can increase appropriately for IO-intensive tasksFullMode: Recommend usingWaitmode in production to avoid task loss

🎯 Step 2: Core Processor Implementation

C#public class ChannelTaskProcessor : IDisposable

{

private readonly Channel<TaskItem> _channel;

private readonly ChannelWriter<TaskItem> _writer;

private readonly ChannelReader<TaskItem> _reader;

private readonly List<Task> _consumerTasks = new();

public ChannelTaskProcessor(IOptions<ChannelTaskProcessorOptions> options)

{

_options = options.Value;

// 🔧 Create bounded channel - Performance critical configuration

var channelOptions = new BoundedChannelOptions(_options.Capacity)

{

FullMode = _options.FullMode,

SingleReader = false, // Support multiple consumers

SingleWriter = false, // Support multiple producers

AllowSynchronousContinuations = false // Avoid thread hijacking, improve performance

};

_channel = Channel.CreateBounded<TaskItem>(channelOptions);

_writer = _channel.Writer;

_reader = _channel.Reader;

}

// 🚀 Start multiple consumer coroutines

public async Task StartAsync(CancellationToken cancellationToken = default)

{

for (int i = 0; i < _options.ConsumerCount; i++)

{

string consumerId = $"Consumer-{i:D2}";

var consumerTask = StartConsumer(consumerId, cancellationToken);

_consumerTasks.Add(consumerTask);

}

_isStarted = true;

}

}

⚡ Step 3: High-Performance Consumer Implementation

C#// 👨💼 Consumer work loop - Async-first design

private async Task StartConsumer(string consumerId, CancellationToken cancellationToken)

{

try

{

// 🔄 Use async enumerator, zero-allocation traversal

await foreach (var task in _reader.ReadAllAsync(cancellationToken))

{

await ProcessTaskWithTimeout(task, consumerId, cancellationToken);

}

}

catch (OperationCanceledException) when (cancellationToken.IsCancellationRequested)

{

_logger.LogInformation("🛑 Consumer {ConsumerId} stopped safely", consumerId);

}

}

// ⏱️ Task processing with timeout control - Prevent task deadlock

private async Task ProcessTaskWithTimeout(TaskItem task, string consumerId, CancellationToken cancellationToken)

{

using var timeoutCts = CancellationTokenSource.CreateLinkedTokenSource(cancellationToken);

timeoutCts.CancelAfter(_options.TaskTimeout);

var stopwatch = Stopwatch.StartNew();

try

{

await ProcessTask(task, timeoutCts.Token);

stopwatch.Stop();

_metrics.IncrementProcessed(consumerId);

TaskProcessed?.Invoke(this, new TaskProcessedEventArgs(task, consumerId, stopwatch.Elapsed));

}

catch (OperationCanceledException) when (timeoutCts.Token.IsCancellationRequested)

{

_logger.LogWarning("⏰ Task {TaskId} processing timeout", task.Id);

_metrics.IncrementFailed();

}

catch (Exception ex)

{

_metrics.IncrementFailed();

TaskFailed?.Invoke(this, new TaskFailedEventArgs(task, consumerId, ex));

}

}

🎯 Core Optimization Tips:

- Async Enumerator:

ReadAllAsyncperforms better than while loops - Timeout Control: Prevent single task from blocking entire consumer

- Event-Driven: Achieve loosely coupled monitoring and logging through events

🔄 Step 4: Producer and Graceful Shutdown

C#// 📥 Async enqueue - Support backpressure control

public async Task<bool> EnqueueTaskAsync(TaskItem task, CancellationToken cancellationToken = default)

{

try

{

await _writer.WriteAsync(task, cancellationToken);

_metrics.IncrementEnqueued();

return true;

}

catch (OperationCanceledException)

{

return false; // Graceful cancellation handling

}

}

// 🛑 Graceful shutdown - Zero data loss

public async Task StopAsync(CancellationToken cancellationToken = default)

{

// 1️⃣ Stop accepting new tasks

_writer.Complete();

// 2️⃣ Wait for existing tasks to complete

using var timeoutCts = CancellationTokenSource.CreateLinkedTokenSource(cancellationToken);

timeoutCts.CancelAfter(_options.GracefulShutdownTimeout);

try

{

await Task.WhenAll(_consumerTasks).WaitAsync(timeoutCts.Token);

_logger.LogInformation("✅ All tasks completed safely");

}

catch (OperationCanceledException)

{

_logger.LogWarning("⏰ Graceful shutdown timeout, forcing stop");

_cts.Cancel(); // Force cancel remaining tasks

}

}

🎮 Practical Applications: Three Typical Scenarios

🌐 Scenario 1: API Batch Processing

C#// 🔥 High-concurrency API request processing

public class ApiTaskProcessor : ChannelTaskProcessor

{

private readonly HttpClient _httpClient;

protected override async Task ProcessTask(TaskItem task, CancellationToken cancellationToken)

{

var apiUrl = task.Properties["ApiUrl"].ToString();

var response = await _httpClient.GetAsync(apiUrl, cancellationToken);

// Process response data

var content = await response.Content.ReadAsStringAsync();

_logger.LogInformation("✅ API call completed: {Url} - Status: {Status}", apiUrl, response.StatusCode);

}

}

📊 Scenario 2: Bulk Data Import

C#// 📈 Database bulk write optimization

public class DataImportProcessor : ChannelTaskProcessor

{

private readonly IDbConnection _connection;

protected override async Task ProcessTask(TaskItem task, CancellationToken cancellationToken)

{

var batchData = JsonSerializer.Deserialize<DataBatch>(task.Data);

// Use bulk insert to improve performance

await _connection.ExecuteAsync(

"INSERT INTO Records (Id, Data) VALUES (@Id, @Data)",

batchData.Records);

_logger.LogInformation("💾 Bulk import completed: {Count} records", batchData.Records.Count);

}

}

📧 Scenario 3: Message Queue Processing

C#// 📮 Message queue consumer

public class MessageQueueProcessor : ChannelTaskProcessor

{

protected override async Task ProcessTask(TaskItem task, CancellationToken cancellationToken)

{

var message = JsonSerializer.Deserialize<QueueMessage>(task.Data);

// Dispatch processing based on message type

switch (message.Type)

{

case "email":

await SendEmailAsync(message, cancellationToken);

break;

case "sms":

await SendSmsAsync(message, cancellationToken);

break;

}

}

}

📊 Performance Monitoring and Tuning

Real-time Metrics Monitoring

C#// 📊 Real-time performance metrics monitoring

private async Task StartMetricsReporting(CancellationToken cancellationToken)

{

using var timer = new PeriodicTimer(TimeSpan.FromSeconds(30));

while (await timer.WaitForNextTickAsync(cancellationToken))

{

var successRate = (double)_metrics.TotalProcessed / _metrics.TotalEnqueued * 100;

_logger.LogInformation(

"📊 Performance Metrics - Success Rate:{Rate:F1}% Queue Backlog:{Pending} Avg Time:{AvgTime:F0}ms",

successRate, _metrics.PendingCount, GetAverageProcessingTime());

}

}

🔧 Performance Tuning Recommendations:

- Monitor queue backlog, adjust consumer count timely

- Optimize concurrency based on CPU and IO usage

- Regularly analyze failed tasks, optimize timeout configuration

💎 Three "Collection-Worthy" Technical Points

- Async-First Principle: Use

async/awaitinstead ofTask.Run, avoid thread pool abuse - Backpressure Control Mechanism: Automatically implement flow control through bounded Channel, prevent memory overflow

- Graceful Shutdown Pattern: Stop producers first, then wait for consumers to complete, ensure zero data loss

🎯 Summary and Outlook

Through the complete implementation in this article, we solved three core problems of C# high-concurrency task processing: performance bottlenecks, resource management, and observability. This Channel task processor not only delivers excellent performance but also has production-grade stability and scalability.

Key Takeaways:

- Mastered best practices of modern C# asynchronous programming

- Learned to build highly available task processing systems

- Understood the importance of performance monitoring and graceful shutdown

🤔 Discussion Question: In your projects, what other scenarios can apply Channel to improve performance? What tricky concurrency processing problems have you encountered?

💬 Interactive Discussion: Welcome to share your practical application experience in the comments, or raise technical challenges you've encountered. Let's explore optimal solutions together!

Please share with more peers if you find it useful 🔄, let more C# developers benefit from this high-performance solution!

Follow me for more C# advanced programming tips and best practice sharing 🚀

Related Information

Files shared via cloud storage: AppChannelTaskProcessor.zip Link: https://pan.baidu.com/s/1pV32UNMf4_wY9q1o3WWxWA?pwd=wj79 Extract code: wj79 --Shared by Baidu Cloud Super Member v9

本文作者:技术老小子

本文链接:

版权声明:本博客所有文章除特别声明外,均采用 BY-NC-SA 许可协议。转载请注明出处!